In the preceding examples, agents could read values out of a ValueLayer and immediately add changes to these values. We addressed the issue of synchronization across processes, but depending on the simulation, another issue might need to be addressed, which we will term Synchronous Updating.

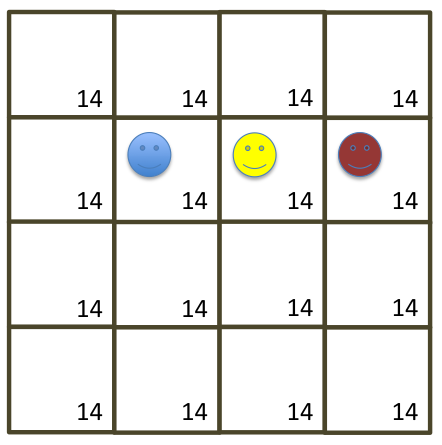

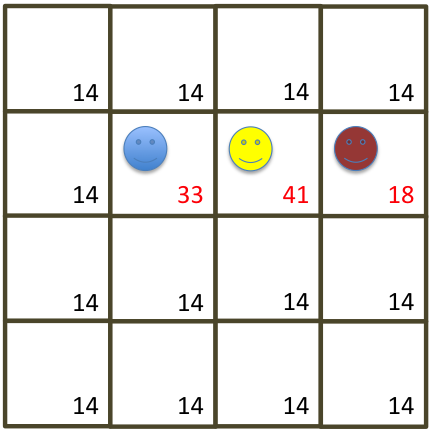

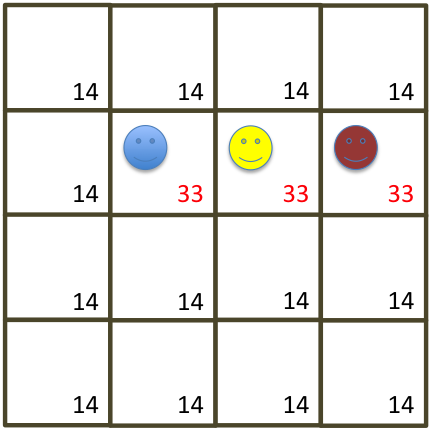

Synchronous Updating refers to a process where all of the current values in the ValueLayer must remain available even as new values are being calculated from them. Consider the following situation, in which three agents (from left to right, blue, yellow, and red) are on a value layer. Initially all the cells in the value layer have the same value, '14':

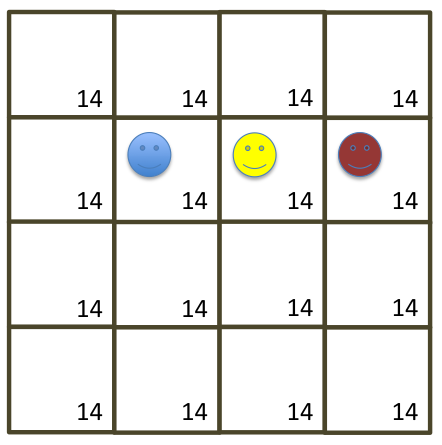

Suppose that the three agents must do something based on the values they see in their own and adjacent cells, and that they will then update their own cell's value based on this. 'Blue' goes first, and calculates the new cell value of 33:

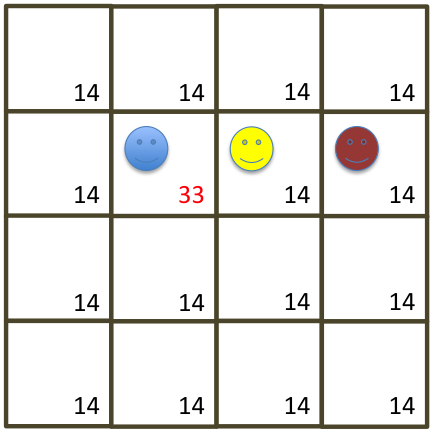

'Yellow' goes next, but because there is now a '33' in the adjacent cell, it calculates a different value, 41:

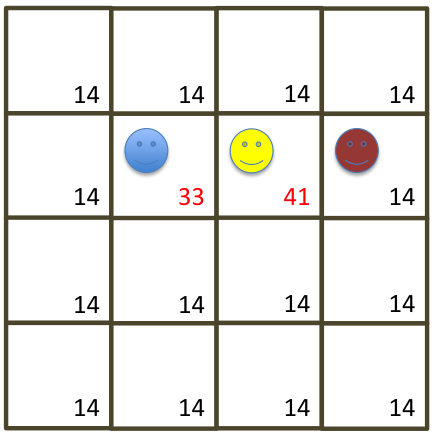

'Red' moves last and, because it is now working from yet another set of values, calculates its new value to be 18:

This may be perfectly acceptable, and may even be desirable for some simulations. However, for others, it may be incorrect for three agents starting at the same time and looking at the same values ('14') to come up with different answers. Also note that the order in which the agents act significantly changes the values they each arrive at.

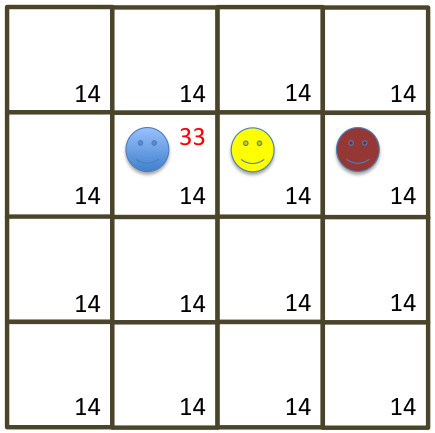

This kind of algorithm is called Asynchronous Updating, because the values in the value layer are being updated one-by-one instead of at the same time. By contrast, consider a different approach. First, 'blue' performs its action, and it calculates the new value but only stores it temporarily, instead of actually changing it:

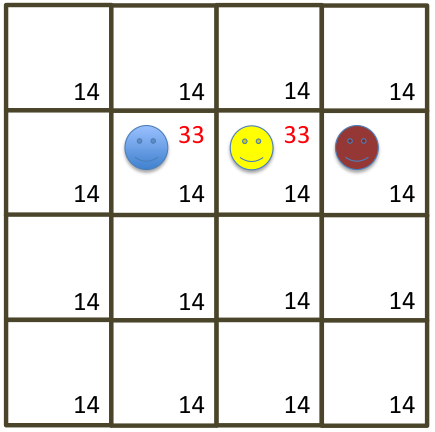

Next, 'Yellow' does the same, but because it is working from the same original values, it arrives at the same result:

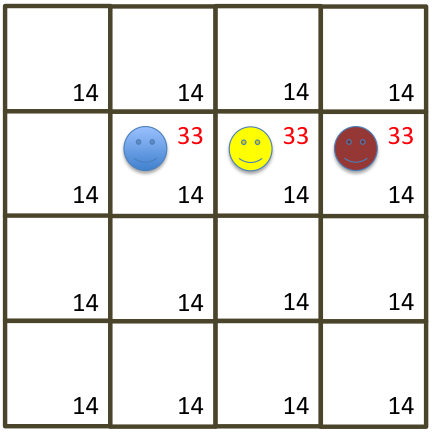

'Red' follows the same procedure:

After all of the agents have performed their calculations, all of the values are updated at the same time ('synchronously'):

The typical implementation of Synchronous Updating uses two separate value layers, one 'current' and one 'next'. When the calculations on the 'current' layer are complete and the results are stored in the 'next' layer, the roles are switched. (This is usually done by swapping pointers, which is much faster than copying all of the values from one layer into the other.)

Repast HPC provides a single class that handles these operations for the user. It is called the ValueLayerNDSU ('Value Layer, N-Dimensional, Synchronous Updates'). To demonstrate it, we will change our simulation from one in which agents make their changes in sequence, to one in which agents make changes to the 'next' layer while leaving the current values unchanged.

The ValueLayerNDSU includes a set of methods that perform operations on both value layers; the operations that act on the 'current' value layer are the same as in the ValueLayerND, and the operations that act on the 'next' layer are named to match, but operate on the 'secondary' value layer, hence:

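| ValueLayerND: | ValueLayerNDSU: |
|---|---|
| getValueAt | getSecondaryValueAt |
| setValueAt | setSecondaryValueAt |
| addValueAt | addSecondaryValueAt |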

We can modify our demo example to make use of these. First, we must create a ValueLayerNDSU instead of a ValueLayerND. In Model.h:

    class RepastHPCDemoModel{
        int stopAt;
        int countOfAgents;
        repast::Properties* props;
        repast::SharedContext<RepastHPCDemoAgent> context;
        RepastHPCDemoAgentPackageProvider* provider;
        RepastHPCDemoAgentPackageReceiver* receiver;
        repast::SVDataSet* agentValues;
        repast::SharedDiscreteSpace<RepastHPCDemoAgent, repast::WrapAroundBorders, repast::SimpleAdder<RepastHPCDemoAgent> >* discreteSpace;
        repast::ValueLayerNDSU<double>* valueLayer;

And in Model.cpp:

    RepastHPCDemoModel::RepastHPCDemoModel(std::string propsFile, int argc, char** argv, boost::mpi::communicator* comm): context(comm){
        props = new repast::Properties(propsFile, argc, argv, comm);
        stopAt = repast::strToInt(props->getProperty("stop.at"));
        countOfAgents = repast::strToInt(props->getProperty("count.of.agents"));
        initializeRandom(*props, comm);
        if(repast::RepastProcess::instance()->rank() == 0) props->writeToSVFile("./output/record.csv");
        provider = new RepastHPCDemoAgentPackageProvider(&context);
        receiver = new RepastHPCDemoAgentPackageReceiver(&context);

        repast::Point<double> origin(-100,-100,-100);
        repast::Point<double> extent(200, 200, 200);
        repast::GridDimensions gd(origin, extent);

        std::vector<int> processDims;
        processDims.push_back(2);
        processDims.push_back(2);
        processDims.push_back(2);

        valueLayer = new repast::ValueLayerNDSU<double>(processDims, gd, 2, true, 10, 1);

Notice that there is no change to the structure of the arguments to the constructor (though for this demo we will initialize all cells to '10' instead of '0'). After this the Agent class must be changed. The first changes are to the method signature for the 'process values' method. In Agent.h:

    /* Actions */
    bool cooperate(); // Will indicate whether the agent cooperates or not; probability determined by = c / total
    void play(repast::SharedContext<RepastHPCDemoAgent>* context); // Choose three other agents from the given context and see if they cooperate or not
    void move(repast::SharedDiscreteSpace<RepastHPCDemoAgent, repast::WrapAroundBorders, repast::SimpleAdder<RepastHPCDemoAgent> >* space);
    void processValues(repast::ValueLayerNDSU<double>* valueLayer, repast::SharedDiscreteSpace<RepastHPCDemoAgent, repast::WrapAroundBorders, repast::SimpleAdder<RepastHPCDemoAgent> >* space);

And in Agent.cpp, the method signature must be changed to match, and the Agent updated to use the new class appropriately:

    void RepastHPCDemoAgent::processValues(repast::ValueLayerNDSU<double>* valueLayer, repast::SharedDiscreteSpace<RepastHPCDemoAgent, repast::WrapAroundBorders, repast::SimpleAdder<RepastHPCDemoAgent> >* space){
        std::vector<int> agentLoc;
        space->getLocation(id_, agentLoc);
        std::cout << " " << id_ << " " << agentLoc[0] << "," << agentLoc[1] << "," << agentLoc[2] << " SPACE: " << space->dimensions() << std::endl;

        // The adjacent cell this agent will compare against
        std::vector<int> agentNewLoc;
        agentNewLoc.push_back(agentLoc[0] + (id_.id() < 7 ? -1 : 1));
        agentNewLoc.push_back(agentLoc[1] + (id_.id() > 3 ? -1 : 1));
        agentNewLoc.push_back(agentLoc[2] + (id_.id() > 5 ? -1 : 1));

        // Both reads come from the 'current' layer
        bool errorFlag1 = false;
        bool errorFlag2 = false;
        double originalValue = valueLayer->getValueAt(agentLoc, errorFlag1);
        double nextValue = valueLayer->getValueAt(agentNewLoc, errorFlag2);
        if(errorFlag1 || errorFlag2){
            std::cout << "An error occurred for Agent " << id_ << " in RepastHPCDemoAgent::processValues()" << std::endl;
            std::cout << " " << errorFlag1 << " " << errorFlag2 << std::endl;
            std::cout << " " << id_ << " " << agentLoc[0] << "," << agentLoc[1] << "," << agentLoc[2] << std::endl;
            std::cout << " " << valueLayer->getLocalBoundaries() << std::endl;
            return;
        }

        // All writes go to the secondary ('next') layer
        if(originalValue < nextValue && total > 0){ // If the likely next value is better
            valueLayer->addSecondaryValueAt(total-c, agentLoc, errorFlag1); // Drop part of what you're carrying
            std::cout << "Agent " << id_ << " dropping " << (total - c) << " at " << agentLoc[0] << "," << agentLoc[1] << "," << agentLoc[2] << " for new value " << valueLayer->getSecondaryValueAt(agentLoc, errorFlag1) << std::endl;
            total = c;
        }
        else{ // Otherwise
            total += originalValue; // Pick up whatever was in the value layer
            valueLayer->setSecondaryValueAt(0, agentLoc, errorFlag2); // Secondary layer is now at zero for this cell
            std::cout << "Agent " << id_ << " picking up " << originalValue << " at " << agentLoc[0] << "," << agentLoc[1] << "," << agentLoc[2] << " for new value " << valueLayer->getSecondaryValueAt(agentLoc, errorFlag2) << std::endl;
        }
    }

Notice that the get calls that drive the agent's decision use the original form and read from the current value layer, while the set and add calls write to the secondary ('next') layer. (The getSecondaryValueAt calls appear only in the log output.) This means that every agent assesses the state of the value layer using the original values, not the values as modified by the other agents.

After this, one other change must be made: the 'switch' from the original values in the 'current' layer to the new values in the secondary layer must be performed using the 'ValueLayerNDSU::switchValueLayer()' method. In the 'doSomething' method of the model class:

    valueLayer->copyCurrentToSecondary(); // Fill the secondary layer with the current values so that unchanged cells carry over

    bool doWrite = (repast::RepastProcess::instance()->getScheduleRunner().currentTick() == 2.0);
    if(doWrite) valueLayer->write("./","TEST_BEFORE_PROCESS",true);

    it = agents.begin();
    while(it != agents.end()){
        (*it)->processValues(valueLayer, discreteSpace);
        it++;
    }

    if(doWrite) valueLayer->write("./","TEST_BEFORE_SWITCH",true);
    valueLayer->switchValueLayer(); // Begin using the new values
    if(doWrite) valueLayer->write("./","TEST_AFTER",true);

    std::cout << " VALUE LAYER SYNCHRONIZING " << std::endl;
    valueLayer->synchronize();
    std::cout << " VALUE LAYER DONE SYNCHRONIZING " << std::endl;

There are two very important caveats to using the synchronous update value layer. First, when 'synchronize' is called, it applies only to the current value layer; the secondary value layer is not synchronized. To synchronize the secondary value layer, call 'switchValueLayer' and then call 'synchronize'.

Second, 'switchValueLayer' does not change the values in either value layer. This means that under certain circumstances, to initialize the process, you must call 'copyCurrentToSecondary' to fill the secondary layer with the values from the current layer, so that any cells that are not changed by the algorithm contain the correct (original) values when all of the agents have acted. (The exception is when you are moving through all the cells and know that the value in the secondary layer will be overwritten for all of them.)

As in the previous demo, you can set the time tick in the temporary code to choose a point at which to write the output files. In this case, the first file will be written from the original layer before the agents perform their processes; the second will be after the agents have processed, but the output is still from the primary layer, where no changes have been made. After the 'switch' takes place, the third file is written, this time from what was the secondary layer (now the new primary layer), and it shows the changes that the agents made.